
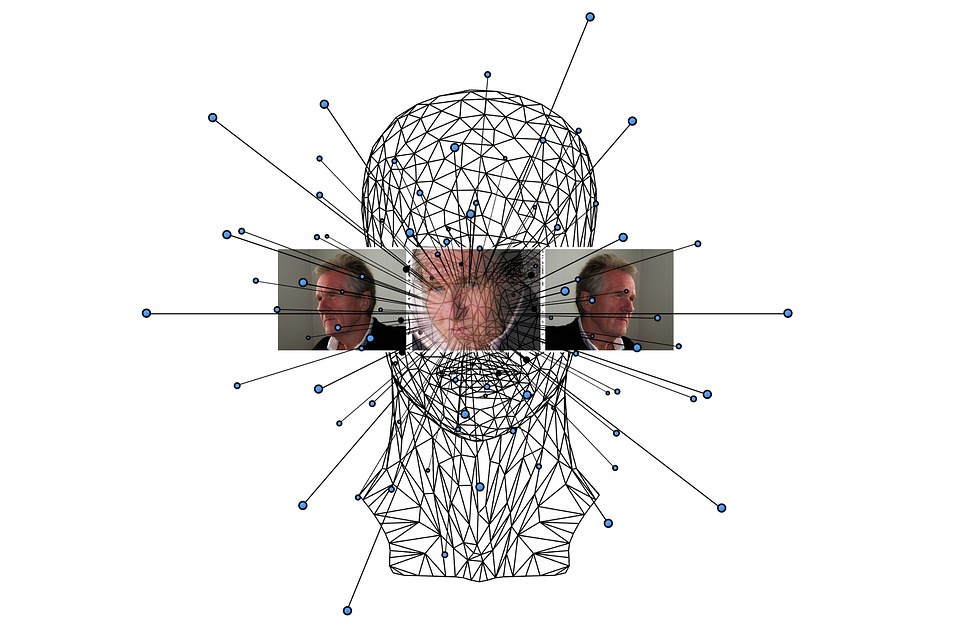

Artificial Intelligence (AI) is advancing rapidly and is increasingly built into decision-making processes that affect our daily lives. While AI can make decisions faster and, in some settings, more accurately, it also raises important ethical questions. In this article, we explore the ethical implications of using AI in decision-making and the challenges that come with this rapidly evolving technology.

The Role of AI in Decision-Making

AI can analyze vast amounts of data, identify patterns, and make predictions or recommendations based on what it finds. This makes it a valuable tool for decision-making in domains such as healthcare, finance, and criminal justice. However, its use raises ethical concerns, particularly regarding transparency, accountability, and fairness.

Transparency and Accountability

One of the key ethical dilemmas of using AI in decision-making is the lack of transparency in how decisions are reached. AI algorithms can be highly complex, making it hard for the people affected, and sometimes for the developers themselves, to understand the reasoning behind a particular decision. Without that understanding, it is difficult to hold the organizations that build and deploy AI systems accountable, and unjust or biased outcomes can go unnoticed.

Fairness and Bias

AI systems tend to inherit the biases of the data they are trained on. If the training data is biased or flawed, the AI system may perpetuate and even amplify those biases, leading to discriminatory outcomes. This raises significant ethical concerns, particularly in high-stakes scenarios such as loan approvals, hiring, and criminal sentencing. Ensuring fairness and mitigating bias in AI decision-making is a crucial ethical imperative.
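One simple way to make a concern like this measurable is to compare how often each group receives a favorable decision. The sketch below is only an illustration: the function names, the group labels "A" and "B", and the decision data are all made up for this example, and the ratio it computes is just one of many possible fairness checks. A commonly cited rule of thumb treats a ratio below 0.8 as a signal worth investigating, not as proof of discrimination.

```python
from collections import defaultdict


def selection_rates(records):
    """Return the fraction of favorable decisions per group.

    `records` is an iterable of (group, approved) pairs, where
    `approved` is True for a favorable decision.
    """
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, approved in records:
        totals[group] += 1
        if approved:
            positives[group] += 1
    return {group: positives[group] / totals[group] for group in totals}


def disparate_impact_ratio(records):
    """Return the lowest group selection rate divided by the highest."""
    rates = selection_rates(records)
    highest = max(rates.values())
    if highest == 0:
        # No group received a favorable decision, so the rates do not differ.
        return 1.0
    return min(rates.values()) / highest


# Hypothetical loan decisions: 6 of 10 approved in group A, 3 of 10 in group B.
decisions = (
    [("A", True)] * 6 + [("A", False)] * 4
    + [("B", True)] * 3 + [("B", False)] * 7
)

ratio = disparate_impact_ratio(decisions)
print(f"Disparate impact ratio: {ratio:.2f}")  # prints 0.50 for this data
```

A check like this only flags a difference in outcomes. Deciding whether that difference is unjustified still requires human judgment about the context and the data behind it.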

Privacy and Consent

AI often relies on large volumes of personal data to make informed decisions, which raises important questions about privacy and consent. When individuals’ data is used to inform AI-driven decisions, their privacy rights must be respected and they must have given informed consent for that specific use. Failing to uphold these principles can lead to serious violations of individuals’ privacy rights.

Impact on Human Autonomy

As AI becomes more prevalent in decision-making processes, there is growing concern about its impact on human autonomy. Decisions that significantly affect individuals’ lives, when made by AI systems, raise questions about the erosion of human agency and the delegation of critical choices to non-human entities. Balancing the benefits of AI-driven efficiency with the preservation of human autonomy is a key ethical consideration.

The Need for Ethical Frameworks

Given the complex ethical dilemmas associated with using AI in decision-making, there is a pressing need for robust ethical frameworks and guidelines to govern its development and deployment. These frameworks should prioritize transparency, fairness, accountability, and the protection of individuals’ rights. Additionally, interdisciplinary collaboration involving ethicists, technologists, policymakers, and other stakeholders is essential in shaping responsible AI practices.

Case Study: AI in Criminal Justice

One notable example of the ethical dilemmas surrounding AI in decision-making can be found in the criminal justice system. Various jurisdictions have implemented AI algorithms to assist in bail, parole, and sentencing decisions. However, concerns have been raised about the potential biases inherent in these systems, as well as the lack of transparency and accountability in how these decisions are reached. This has sparked important debates about the ethical implications of relying on AI in contexts where individual freedoms and rights are at stake.

FAQs

Q: What are some potential consequences of biased AI decision-making?

A: Biased AI decision-making can lead to unfair treatment of individuals, perpetuate systemic discrimination, and undermine trust in decision-making processes. In scenarios such as hiring and lending, biased AI systems can have far-reaching societal impacts, further entrenching disparities and inequities.

Q: How can we address the lack of transparency in AI decision-making?

A: Addressing the lack of transparency in AI decision-making requires measures such as algorithmic accountability, independent audits of AI systems, and the development of explainable AI techniques. Individuals should be able to understand the basis for AI-driven decisions and to challenge those decisions when the outcomes appear wrong or unfair.

Conclusion

The use of AI in decision-making presents complex and multifaceted ethical challenges that necessitate thoughtful consideration and proactive ethical governance. As AI continues to shape various aspects of society, addressing the ethical dilemmas it poses is paramount. By prioritizing transparency, fairness, privacy, and human autonomy, we can work towards harnessing the potential of AI in decision-making while upholding ethical principles and promoting just and equitable outcomes.
