
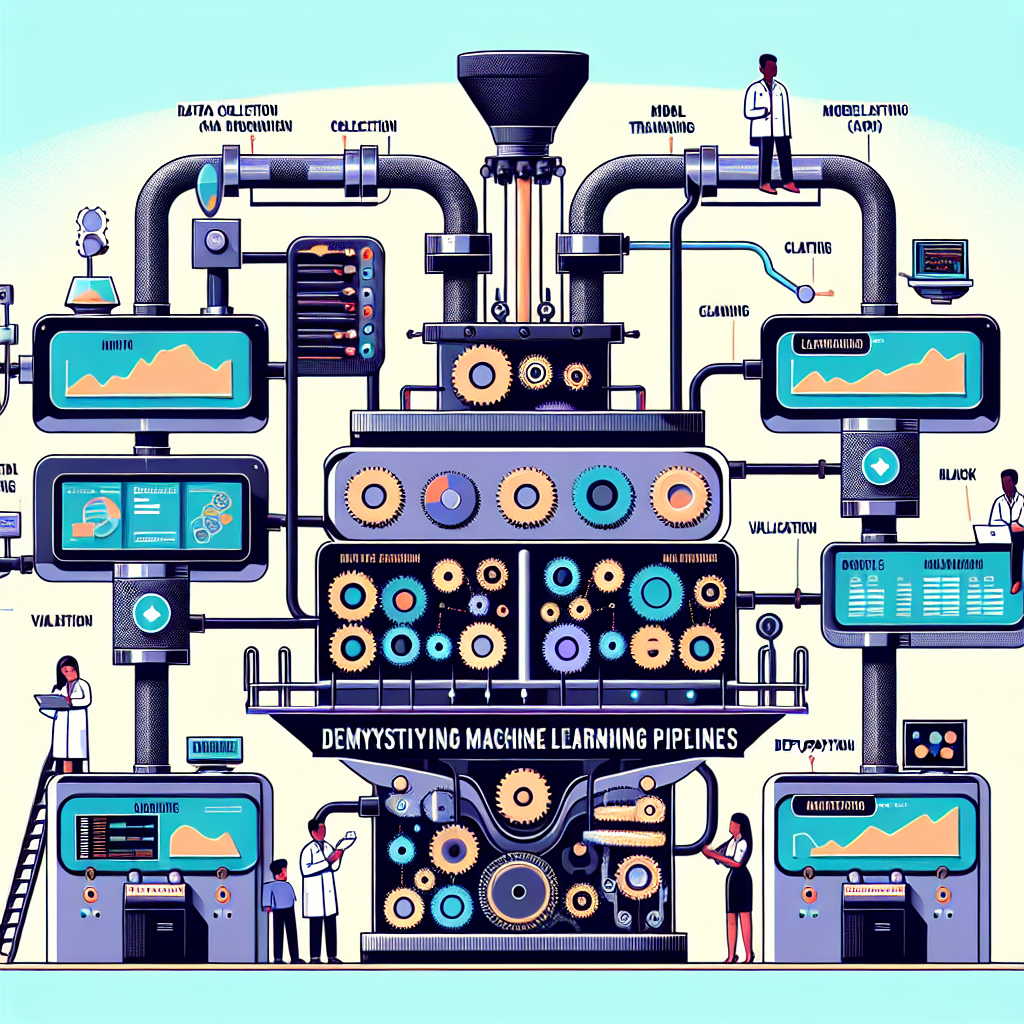

Machine learning pipelines have become an essential component of modern data science. They provide a systematic and scalable approach to building and deploying machine learning models, allowing data scientists and engineers to efficiently experiment with different algorithms, feature engineering techniques, and model architectures. This article explains what machine learning pipelines are, how they work, and why they are important.

What is a Machine Learning Pipeline?

A machine learning pipeline is a sequence of data processing components that are applied in a specific order to transform raw data into a predictive model. These components typically include data preprocessing, feature engineering, model training, model evaluation, and model deployment. Each component in the pipeline takes input data, performs some transformation, and produces output data that is passed to the next component. The goal of a machine learning pipeline is to automate and streamline the process of building and deploying machine learning models, making it easier for data scientists and engineers to iterate on different approaches and improve model performance.

How Does a Machine Learning Pipeline Work?

Machine learning pipelines are designed to handle the entire lifecycle of a machine learning project, from data ingestion to model deployment. The pipeline is typically organized into a series of stages, with each stage representing a specific task or activity. Data is passed through each stage in the pipeline, and the output of one stage becomes the input to the next stage. This allows for seamless integration of different components and ensures that the entire process is automated and repeatable.
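
The idea that the output of one stage becomes the input to the next can be sketched in a few lines of plain Python. This is only an illustration under simple assumptions: the `run_pipeline` helper and the three stage functions are hypothetical names made up for this example, not part of any library.

```python
from functools import reduce
from typing import Any, Callable, Sequence

# A stage is any callable that takes data and returns transformed data.
Stage = Callable[[Any], Any]


def run_pipeline(stages: Sequence[Stage], data: Any) -> Any:
    """Pass data through each stage in order; each output feeds the next stage."""
    return reduce(lambda current, stage: stage(current), stages, data)


# Hypothetical stages, for illustration only.
def drop_missing(rows):
    """Remove entries that have no value."""
    return [r for r in rows if r is not None]


def square(rows):
    """Transform each remaining value."""
    return [r * r for r in rows]


def total(rows):
    """Reduce the transformed values to a single number."""
    return sum(rows)


result = run_pipeline([drop_missing, square, total], [1, None, 2, 3])
print(result)  # 1 + 4 + 9 = 14
```

Because every stage shares the same shape (data in, data out), a stage can be swapped or reordered without touching the others, which is what makes the process repeatable.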

At a high level, a typical machine learning pipeline will include the following stages:

- Data Ingestion: The first stage of the pipeline involves collecting and loading data from various sources, such as databases, files, or APIs.

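- Data Preprocessing: In this stage, the raw data is cleaned, transformed, and formatted to make it suitable for machine learning algorithms. This may involve handling missing values, encoding categorical variables, scaling numerical features, and splitting the data into training and testing sets. Statistics used for imputation or scaling should be computed on the training set only and then applied to the test set, so that no information from the test data leaks into training.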

- Feature Engineering: This stage focuses on creating new features or transforming existing features to improve the predictive power of the model. This may include techniques such as one-hot encoding, feature scaling, dimensionality reduction, and text or image processing. The first two overlap with preprocessing, and in practice the boundary between the two stages is loose.

- Model Training: Here, machine learning algorithms are applied to the preprocessed data to learn patterns and relationships between input and output variables. This may involve training multiple models and tuning hyperparameters to find the best performing model.

- Model Evaluation: The trained models are evaluated on held-out data that was not used for training. For classification tasks, common metrics include accuracy, precision, recall, and F1 score. This stage helps in identifying the best model for deployment.

- Model Deployment: The final stage of the pipeline involves deploying the trained model into a production environment, where it can make predictions on new data, either in real time or in scheduled batches.
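
To make the stages above concrete, here is a minimal sketch that walks through ingestion, preprocessing, training, and evaluation using only the Python standard library. Everything in it is an assumption chosen for illustration: the data is synthetic, the model is a simple nearest-centroid classifier, and all function names are hypothetical. Deployment is left out because it depends on the target environment.

```python
import math
import random


def ingest():
    """Data ingestion: stand-in for reading from a database, file, or API."""
    rng = random.Random(0)
    rows = []
    for _ in range(100):
        label = rng.randint(0, 1)
        # Synthetic data: class 1 is centered near 3.0, class 0 near 0.0.
        rows.append(([rng.gauss(3.0 * label, 1.0), rng.gauss(3.0 * label, 1.0)], label))
    rows[5][0][0] = None  # simulate one missing value
    return rows


def split(rows, test_fraction=0.25, seed=0):
    """Shuffle with a fixed seed and split into training and testing sets."""
    shuffled = rows[:]
    random.Random(seed).shuffle(shuffled)
    cut = int(len(shuffled) * (1 - test_fraction))
    return shuffled[:cut], shuffled[cut:]


def fit_preprocessor(train_rows):
    """Learn per-column mean and standard deviation from the training set only."""
    stats = []
    for j in range(len(train_rows[0][0])):
        values = [f[j] for f, _ in train_rows if f[j] is not None]
        mean = sum(values) / len(values)
        std = math.sqrt(sum((v - mean) ** 2 for v in values) / len(values)) or 1.0
        stats.append((mean, std))
    return stats


def transform(rows, stats):
    """Impute missing values with the training mean, then standardize."""
    return [
        ([((m if v is None else v) - m) / s for v, (m, s) in zip(features, stats)], label)
        for features, label in rows
    ]


def train(rows):
    """Model training: compute one centroid per class."""
    centroids = {}
    for label in {lab for _, lab in rows}:
        members = [f for f, lab in rows if lab == label]
        centroids[label] = [sum(col) / len(members) for col in zip(*members)]
    return centroids


def predict(centroids, features):
    """Assign the class whose centroid is closest."""
    return min(centroids, key=lambda lab: math.dist(features, centroids[lab]))


def evaluate(centroids, rows):
    """Model evaluation: accuracy, precision, recall, and F1 for class 1."""
    tp = fp = fn = correct = 0
    for features, label in rows:
        pred = predict(centroids, features)
        correct += pred == label
        tp += pred == 1 and label == 1
        fp += pred == 1 and label == 0
        fn += pred == 0 and label == 1
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": correct / len(rows), "precision": precision, "recall": recall, "f1": f1}


train_rows, test_rows = split(ingest())
stats = fit_preprocessor(train_rows)  # fitted on training data only
model = train(transform(train_rows, stats))
metrics = evaluate(model, transform(test_rows, stats))
print(metrics)
```

Note that the preprocessing statistics are fitted on the training rows and then reused for the test rows, so the evaluation reflects performance on data the pipeline has not seen.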

Why Are Machine Learning Pipelines Important?

Machine learning pipelines offer several benefits that make them an essential tool for data scientists and engineers:

- Automation: Pipelines automate the entire process of building and deploying machine learning models, saving time and effort for data scientists and engineers.

- Reproducibility: By organizing the entire workflow into a series of stages, pipelines make it easy to reproduce and modify experiments, ensuring that results are consistent and reliable.

- Scalability: Because each stage is a separate component, stages can be distributed or scaled independently to handle large volumes of data, making pipelines suitable for enterprise-level machine learning projects.

- Experimentation: Pipelines allow for easy experimentation with different algorithms, feature engineering techniques, and model architectures, enabling data scientists to iterate on different approaches and improve model performance.
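
Reproducibility and experimentation can be illustrated together with a small sketch. It assumes a toy setup invented for this example: synthetic one-dimensional data, a threshold "model", and hypothetical function names. The point is only that a fixed seed makes a run repeatable and that candidates can be compared in a loop over the same data.

```python
import random


def make_data(seed, n=200):
    """Synthetic 1-D data: the label is 1 when the value exceeds 6 (an arbitrary choice)."""
    rng = random.Random(seed)
    values = [rng.uniform(0, 10) for _ in range(n)]
    return [(v, int(v > 6)) for v in values]


def accuracy(threshold, rows):
    """Fraction of rows where the thresholded prediction matches the label."""
    return sum(int(v > threshold) == label for v, label in rows) / len(rows)


def run_experiment(seed, candidates):
    """Score every candidate threshold on the same seeded dataset."""
    rows = make_data(seed)
    return {t: accuracy(t, rows) for t in candidates}


candidates = [2.0, 4.0, 6.0, 8.0]
first = run_experiment(seed=42, candidates=candidates)
second = run_experiment(seed=42, candidates=candidates)

best = max(first, key=first.get)
print("best threshold:", best)
print("runs identical:", first == second)
```

A real experiment would score candidates on a separate validation set rather than on the data used to choose them; that step is omitted here to keep the sketch short.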

Conclusion

Machine learning pipelines are a foundational tool for modern data science, providing a systematic and scalable approach to building and deploying machine learning models. By automating data processing, feature engineering, model training, model evaluation, and model deployment, pipelines enable data scientists and engineers to efficiently experiment with different approaches and improve model performance. Understanding the components and stages of a machine learning pipeline is essential for anyone working in the field of data science, as it helps them build and deploy machine learning models reliably.

FAQs

What are some popular tools for building machine learning pipelines?

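There are several popular tools and libraries that can be used to build machine learning pipelines, including Apache Airflow, Kubeflow, TensorFlow Extended (TFX), and Apache Beam. They play different roles: Airflow is a general-purpose workflow orchestrator, Kubeflow runs machine learning workflows on Kubernetes, TFX provides components for end-to-end machine learning pipelines built around TensorFlow, and Beam is a programming model for batch and streaming data processing. Used alone or in combination, these tools make it easier to develop, test, and deploy machine learning models at scale.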

What are some best practices for designing and managing machine learning pipelines?

Some best practices for designing and managing machine learning pipelines include modularizing components, versioning data and code, monitoring and logging pipeline runs, and automating pipeline execution. It is also important to document the pipeline architecture and keep the pipeline codebase clean and maintainable.
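
As a rough sketch of versioning and logging, the snippet below records a manifest for each pipeline run: a hash of the input data, a hash of the configuration, and a code version. The manifest fields, the `fingerprint` and `build_manifest` helpers, and the commit id are all hypothetical and chosen only for illustration. Real projects often rely on dedicated data and experiment tracking tools instead.

```python
import hashlib
import json
import logging

logging.basicConfig(level=logging.INFO, format="%(levelname)s %(message)s")
logger = logging.getLogger("pipeline")


def fingerprint(obj) -> str:
    """Stable SHA-256 hash of any JSON-serializable object."""
    payload = json.dumps(obj, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


def build_manifest(data, config, code_version):
    """Record what went into a run so it can be traced and repeated later."""
    manifest = {
        "data_hash": fingerprint(data),
        "config_hash": fingerprint(config),
        "code_version": code_version,
    }
    # Derive a short run id from the inputs: identical inputs give the same id.
    manifest["run_id"] = fingerprint(manifest)[:12]
    return manifest


data = [[1.0, 2.0], [3.0, 4.0]]
config = {"model": "nearest_centroid", "test_fraction": 0.25}
manifest = build_manifest(data, config, code_version="abc1234")  # hypothetical commit id

logger.info("pipeline run %s", json.dumps(manifest, sort_keys=True))
```

Changing the data, the configuration, or the code version changes the run id, so two runs with the same id can be expected to have used the same inputs.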

How can machine learning pipelines be integrated with cloud services?

Machine learning pipelines can be integrated with cloud services such as Google Cloud Platform, AWS, and Azure to leverage cloud-based storage, computing, and machine learning infrastructure. This enables seamless scaling, monitoring, and deployment of machine learning pipelines in a cloud environment, making it easier to manage large-scale machine learning projects.
