Companies and organizations handle large volumes of data, from customer information and sales figures to market trends. Processing and analyzing that data efficiently is essential for making informed decisions and staying competitive. Machine learning pipelines streamline this workflow by letting organizations automate and optimize their data processing tasks.

What are Machine Learning Pipelines?

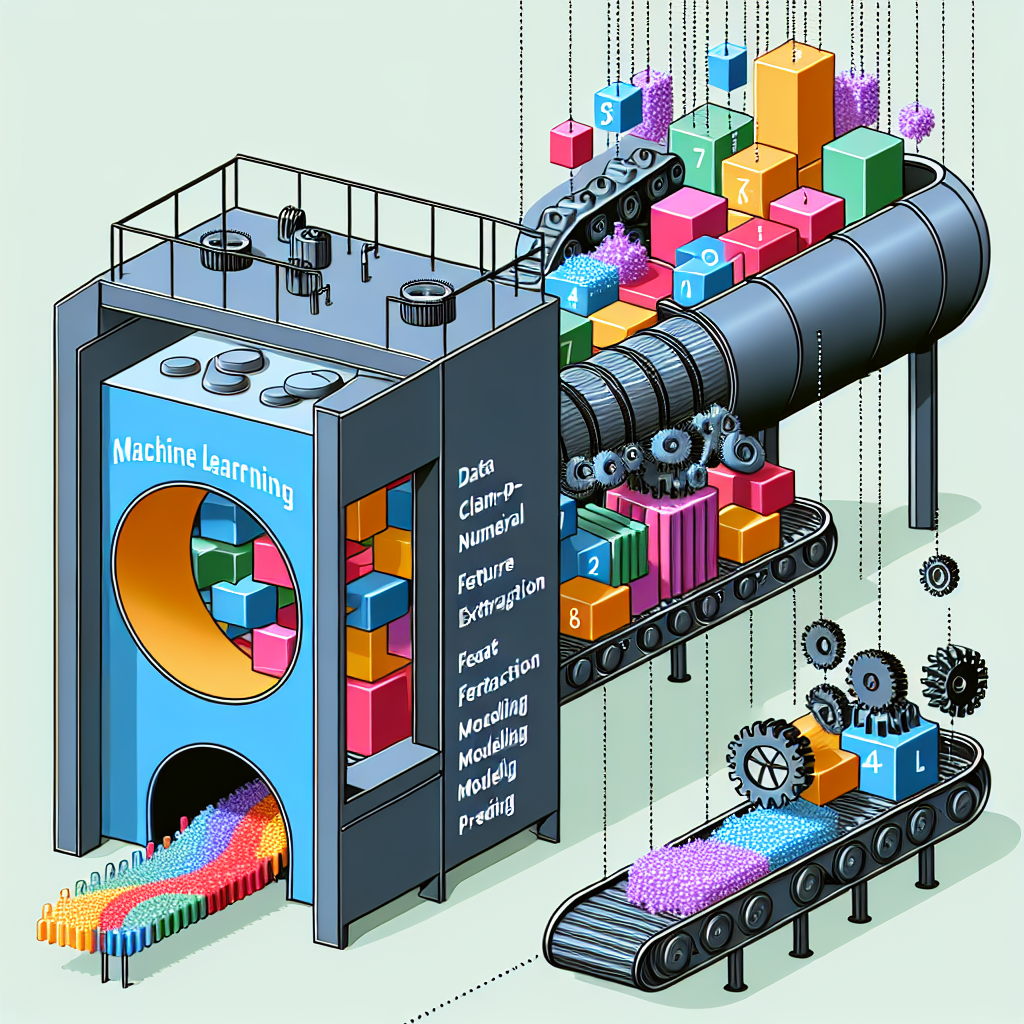

A machine learning pipeline is a series of connected data processing steps that together implement a machine learning workflow. Pipelines automate the work of preparing data and of training, deploying, and managing machine learning models. Organizing these stages into a pipeline lets organizations simplify and speed up the development and deployment of machine learning solutions.
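The idea of "connected steps" can be sketched in a few lines of Python. This is only an illustration, not a production design: `run_pipeline`, `drop_missing`, and `scale_to_unit` are hypothetical names invented for this example. Each stage is a plain function, and the pipeline feeds the output of one stage into the next.

```python
from functools import reduce
from typing import Any, Callable, Sequence

Stage = Callable[[Any], Any]


def run_pipeline(stages: Sequence[Stage], data: Any) -> Any:
    """Feed the output of each stage into the next, in order."""
    return reduce(lambda value, stage: stage(value), stages, data)


# Hypothetical stages, for illustration only.
def drop_missing(rows):
    """Remove entries that have no value."""
    return [r for r in rows if r is not None]


def scale_to_unit(rows):
    """Divide every value by the largest one."""
    top = max(rows)
    return [r / top for r in rows]


result = run_pipeline([drop_missing, scale_to_unit], [2.0, None, 4.0, 8.0])
print(result)  # [0.25, 0.5, 1.0]
```

Because the stages share one calling convention, a stage can be added, removed, or swapped without touching the others.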

Benefits of Machine Learning Pipelines

There are several benefits to using machine learning pipelines for data processing:

- Automation: Machine learning pipelines automate the process of data preparation, model training, and model deployment, reducing the need for manual intervention and streamlining the workflow.

- Reproducibility: By encapsulating the entire machine learning workflow in a pipeline, organizations can rerun their data processing and modeling tasks and obtain consistent results.

- Scalability: Machine learning pipelines can be scaled to handle large volumes of data, making them suitable for processing and analyzing big data.

- Optimization: Pipelines enable organizations to optimize their machine learning tasks by fine-tuning different components and parameters of the workflow.
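Reproducibility is the easiest of these benefits to show in code. In the sketch below, the train/test split depends only on an explicit configuration, so two runs with the same configuration give the same split. The function `split_data` and the `config` dictionary are assumptions made for this example.

```python
import random


def split_data(rows, test_fraction, seed):
    """Shuffle with a private, seeded RNG so the split is repeatable."""
    rng = random.Random(seed)  # does not touch the global random state
    shuffled = list(rows)
    rng.shuffle(shuffled)
    cut = int(len(shuffled) * (1 - test_fraction))
    return shuffled[:cut], shuffled[cut:]


config = {"test_fraction": 0.25, "seed": 42}  # hypothetical run configuration
rows = list(range(20))

first_run = split_data(rows, **config)
second_run = split_data(rows, **config)
print(first_run == second_run)  # True
```

Storing the configuration next to the pipeline's outputs makes it possible to repeat a run later with the same inputs.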

Components of a Machine Learning Pipeline

A typical machine learning pipeline consists of several key components:

- Data Ingestion: The first stage of the pipeline involves ingesting and collecting the raw data from various sources, such as databases, data lakes, or external APIs.

- Data Preprocessing: Once the data is collected, it needs to be preprocessed and cleaned to ensure that it is suitable for training machine learning models.

- Feature Engineering: In this stage, the pipeline generates new features or transforms existing features to improve the performance of the machine learning models.

- Model Training: The pipeline then trains and fine-tunes machine learning models using the preprocessed data and the engineered features.

- Model Evaluation: After training, the models are evaluated to assess their performance. For classification models, common metrics include accuracy, precision, recall, and F1 score.

- Model Deployment: Once a model is deemed satisfactory, it is deployed into a production environment for use in real-world applications.
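The stages above can be sketched end to end with the Python standard library. Everything here is an assumption made for illustration: the tiny inline dataset, the function names, and the one-parameter "model", which is just a threshold. The deployment step is reduced to serializing the model as JSON, where a real pipeline would publish it to a serving environment.

```python
import csv
import io
import json

# Hypothetical raw data; one row has a missing value.
RAW_CSV = """hours,passed
1.0,0
2.0,0
,1
3.0,0
4.0,1
5.0,1
6.0,1
"""


def ingest(text):
    """Data ingestion: parse raw CSV text into dictionaries."""
    return list(csv.DictReader(io.StringIO(text)))


def preprocess(rows):
    """Data preprocessing: drop rows with missing values and cast types."""
    return [
        {"hours": float(r["hours"]), "passed": int(r["passed"])}
        for r in rows
        if r["hours"] and r["passed"]
    ]


def engineer(rows):
    """Feature engineering: rescale hours by the largest value.

    For brevity this uses the whole dataset; a real pipeline would
    fit the scaling on the training split only.
    """
    top = max(r["hours"] for r in rows)
    return [(r["hours"] / top, r["passed"]) for r in rows]


def train(examples):
    """Model training: pick the threshold with the best training accuracy."""
    def accuracy(t):
        return sum((x >= t) == bool(y) for x, y in examples) / len(examples)

    return max(sorted(x for x, _ in examples), key=accuracy)


def evaluate(threshold, examples):
    """Model evaluation: precision, recall, and F1 for the positive class."""
    tp = sum(1 for x, y in examples if x >= threshold and y == 1)
    fp = sum(1 for x, y in examples if x >= threshold and y == 0)
    fn = sum(1 for x, y in examples if x < threshold and y == 1)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    total = precision + recall
    f1 = 2 * precision * recall / total if total else 0.0
    return {"precision": precision, "recall": recall, "f1": f1}


def deploy(threshold):
    """Model deployment (stand-in): serialize the model for a serving system."""
    return json.dumps({"model": "threshold", "threshold": threshold})


examples = engineer(preprocess(ingest(RAW_CSV)))
train_set, test_set = examples[::2], examples[1::2]  # simple hold-out split
threshold = train(train_set)
metrics = evaluate(threshold, test_set)
artifact = deploy(threshold)
print(metrics)
```

On this toy data the held-out precision is 1.0 and the recall is 0.5, which shows why evaluating on data the model has not seen matters: the threshold fits the training split perfectly but misses one positive example in the test split.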

Challenges in Data Processing

While machine learning pipelines offer significant benefits, organizations may face several challenges when implementing and managing these pipelines:

- Data Quality: Ensuring the quality and reliability of the data is crucial for the success of machine learning pipelines. Poor data quality can lead to inaccurate and unreliable models.

- Computational Resources: Training and deploying machine learning models often require significant computational resources, which can be a barrier for organizations with limited infrastructure.

- Model Maintenance: Once deployed, machine learning models need to be regularly maintained and updated to ensure their continued performance and relevance.

- Integration with Existing Systems: Integrating machine learning pipelines with existing data processing systems and workflows can be a complex task, requiring careful planning and coordination.
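Of these challenges, data quality is the one most easily addressed in code. One possible approach, sketched below, is a validation step that runs before training and reports every problem it finds. The function `validate_rows`, the field names, and the allowed range are hypothetical and would need to match your own data.

```python
def validate_rows(rows, required, ranges):
    """Return a list of readable problems; an empty list means the batch passed."""
    problems = []
    for i, row in enumerate(rows):
        # Check that every required field is present and non-empty.
        for field in required:
            if row.get(field) in (None, ""):
                problems.append(f"row {i}: missing {field}")
        # Check that numeric fields fall inside their allowed range.
        for field, (low, high) in ranges.items():
            value = row.get(field)
            if isinstance(value, (int, float)) and not low <= value <= high:
                problems.append(f"row {i}: {field}={value} outside [{low}, {high}]")
    return problems


# Hypothetical batch with one missing value and one implausible value.
batch = [
    {"age": 34, "income": 52000},
    {"age": None, "income": 61000},
    {"age": 212, "income": 48000},
]
problems = validate_rows(batch, required=["age", "income"], ranges={"age": (0, 120)})
for problem in problems:
    print(problem)
# row 1: missing age
# row 2: age=212 outside [0, 120]
```

Collecting all problems, rather than stopping at the first one, gives whoever owns the data source a complete report for each batch.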

Best Practices for Streamlining Data Processing with Machine Learning Pipelines

Despite these challenges, there are several best practices that organizations can follow to streamline their data processing tasks using machine learning pipelines:

- Invest in Data Quality: Prioritize the quality and integrity of your data to ensure that your machine learning models produce accurate and reliable results.

- Optimize Computational Resources: Consider using cloud-based or distributed computing platforms to scale your machine learning pipelines and effectively utilize computational resources.

- Establish Model Monitoring and Maintenance Processes: Implement regular monitoring and maintenance processes to track the performance of deployed models and update them as needed.

- Collaborate Across Teams: Foster collaboration and communication between data scientists, engineers, and domain experts to ensure that machine learning pipelines are aligned with business objectives and requirements.

- Automate Where Possible: Identify opportunities for automation within your data processing workflow to reduce manual effort and improve efficiency.
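Model monitoring can start very simply. The sketch below tracks accuracy over the most recent predictions and flags the model for retraining when accuracy falls below a chosen level. The class `AccuracyMonitor`, the window size, and the 0.75 threshold are assumptions for illustration, and the approach presumes that true labels eventually become available for comparison.

```python
from collections import deque


class AccuracyMonitor:
    """Track accuracy over the most recent predictions and flag degradation."""

    def __init__(self, window, min_accuracy):
        self.outcomes = deque(maxlen=window)  # old outcomes fall off automatically
        self.min_accuracy = min_accuracy

    def record(self, predicted, actual):
        """Store whether one prediction matched the true label."""
        self.outcomes.append(predicted == actual)

    def accuracy(self):
        """Accuracy over the current window, or None if nothing is recorded."""
        return sum(self.outcomes) / len(self.outcomes) if self.outcomes else None

    def needs_retraining(self):
        """Only judge once the window is full, to avoid alerts on tiny samples."""
        full = len(self.outcomes) == self.outcomes.maxlen
        return full and self.accuracy() < self.min_accuracy


monitor = AccuracyMonitor(window=4, min_accuracy=0.75)
for predicted, actual in [(1, 1), (0, 0), (1, 0), (0, 1)]:
    monitor.record(predicted, actual)

print(monitor.accuracy())          # 0.5
print(monitor.needs_retraining())  # True
```

A check like this could run on a schedule and trigger the training stage of the pipeline, which ties monitoring back to automation.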

Conclusion

Machine learning pipelines offer a powerful and effective way to streamline data processing tasks, enabling organizations to automate, optimize, and scale their machine learning workflows. By implementing machine learning pipelines and following best practices, organizations can overcome the challenges of data processing and harness the full potential of their data assets.

FAQs

What is a machine learning pipeline?

A machine learning pipeline is a series of connected data processing steps that together implement a machine learning workflow. Pipelines automate the work of preparing data and of training, deploying, and managing machine learning models.

What are the benefits of using machine learning pipelines for data processing?

Machine learning pipelines offer several benefits, including automation, reproducibility, scalability, and optimization of the machine learning workflow.

What are some of the challenges in data processing with machine learning pipelines?

Organizations may face challenges related to data quality, computational resources, model maintenance, and integration with existing systems when implementing and managing machine learning pipelines.

What are some best practices for streamlining data processing with machine learning pipelines?

Best practices for streamlining data processing with machine learning pipelines include investing in data quality, optimizing computational resources, establishing model monitoring and maintenance processes, collaborating across teams, and automating where possible.
